RL Weekly 38: Clipped objective is not why PPO works, and the Trap of Saliency maps

Published

Dear readers,

Happy holidays! Right after NeurIPS 2019, now the ICLR 2020 results are out! Here are two papers accepted to ICLR 2020 that caught my attention.

I wait for your feedback, either by email or a feedback form. Your input is always appreciated.

- Ryan

Implementation Matters in Deep RL: A Case Study on PPO and TRPO

What it says

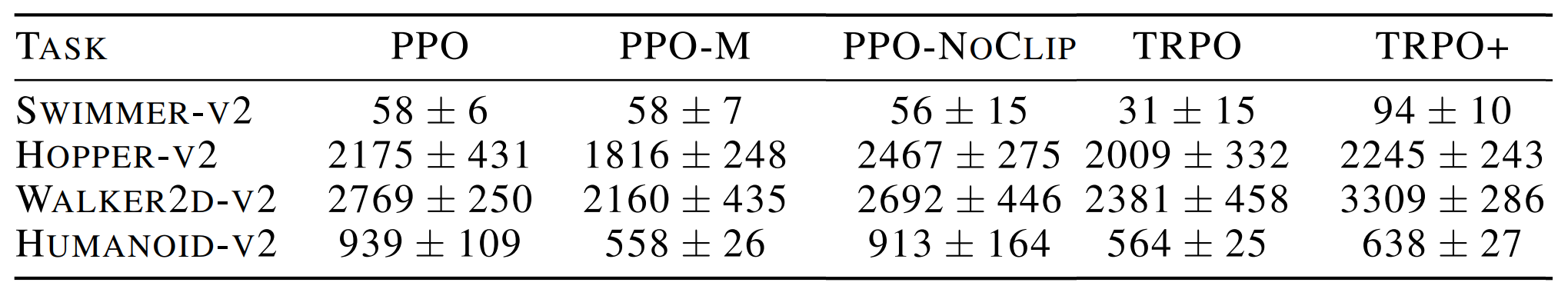

Proximal Policy Optimization (PPO) has been used over Trust Region Policy Optimization (TRPO) due to its simplicity and performance. However, compared to TRPO, PPO has many more “code-level optimizations” such as value function clipping, reward scaling, learning rate annealing, etc. These optimizations make PPO difficult to reproduce, as they are only briefly mentioned in the paper or only mentioned in the code. The authors find that these techniques are actually critical to PPO. In an ablation study, the code-level optimizations have a bigger impact on performance than the clipped objective. Furthermore, the experiments show that these optimizations are also crucial to maintaining the trust region.

Read more

External resources

- Trust Region Policy Optimization (arXiv Preprint)

- Proximal Policy Optimization Algorithms (arXiv Preprint)

- OpenAI Baselines (GitHub Repo)

Exploratory Not Explanatory: Counterfactual Analysis of Saliency Maps for Deep RL

What it says

Saliency maps have been used in RL for qualitative analysis. For example, in Atari Breakout, saliency maps have been shown as evidence for the claim that the agent has found the “tunnel” strategy (clearing small column of bricks) In Figure (a) above, the saliency map is red near the tunnel, denoting high salience. However, the authors find that just by moving the tunnel horizontally, the saliency pattern disappears.

The authors argue that saliency maps have been used in a subjective manner that cannot be falsified with experiments and perform case studies with saliency maps on Atari Breakout and Atari Amidar. The authors conclude that saliency maps should not be used to infer explanations, but as an exploratory tool to formulate hypothesis.

Read more

Here are some more exciting news in RL:

OpenAI Five

The whitepaper for OpenAI Five, the Dota 2 AI system that defeated the best human team, has been uploaded to arXiv.

The PlayStation Reinforcement Learning Environment (PSXLE)

A new environment suite made by modifying a PlayStation emulator.

Learning Human Objectives by Evaluating Hypothetical Behavior

Model the reward function by generating trajectories and asking users to label synthesized transitions with reward.

Never miss an issue of RL Weekly from us, subscribe to our newsletter

Never miss an issue of RL Weekly from us, subscribe to our newsletter