RL Weekly 37: Observational Overfitting, Hindsight Credit Assignment, and Procedurally Generated Environment Suite


Dear readers,

Happy NeurIPS! This week, I have made my summaries more concise to improve the reading experience. I hope that this change makes the newsletter easier to digest.

I look forward to your feedback, either by email or through the feedback form. Your input is always appreciated.

- Ryan

Observational Overfitting in Reinforcement Learning

What it says

Observational overfitting is a phenomenon “where an agent overfits due to properties of the observation which are irrelevant to the latent dynamics of the MDP family.” For example, in the saliency map above, the score and the background objects are highlighted red because they are strongly correlated with progress. This could hinder generalization: the authors report that simply covering the scoreboard with a black rectangle during training resulted in a 10% increase in test performance. The authors use a Linear Quadratic Regulator (LQR) to validate the phenomenon, and find that overparametrization may help as a form of “implicit regularization.” The authors also try ImageNet networks (AlexNet, Inception, ResNet, etc.) on CoinRun environments, and show that overparametrization improves generalization to the test set.
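To make the scoreboard experiment concrete, here is a minimal sketch of covering part of an observation with a black rectangle. It assumes the observation is a NumPy image array of shape (height, width, channels); the function name `mask_region` and the rectangle coordinates are hypothetical and not taken from the paper.

```python
import numpy as np

def mask_region(obs, top, left, height, width):
    """Return a copy of `obs` with one rectangle set to zero (black)."""
    masked = obs.copy()  # leave the original observation untouched
    masked[top:top + height, left:left + width, ...] = 0
    return masked

# Hypothetical 64x64 RGB frame that is entirely white.
frame = np.full((64, 64, 3), 255, dtype=np.uint8)

# Assume, for illustration only, that the scoreboard occupies the top 8 rows.
masked_frame = mask_region(frame, top=0, left=0, height=8, width=64)
```

Applying such a mask to every training frame removes one observation feature that correlates with progress but is irrelevant to the dynamics.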

Read more

Hindsight Credit Assignment

What it says

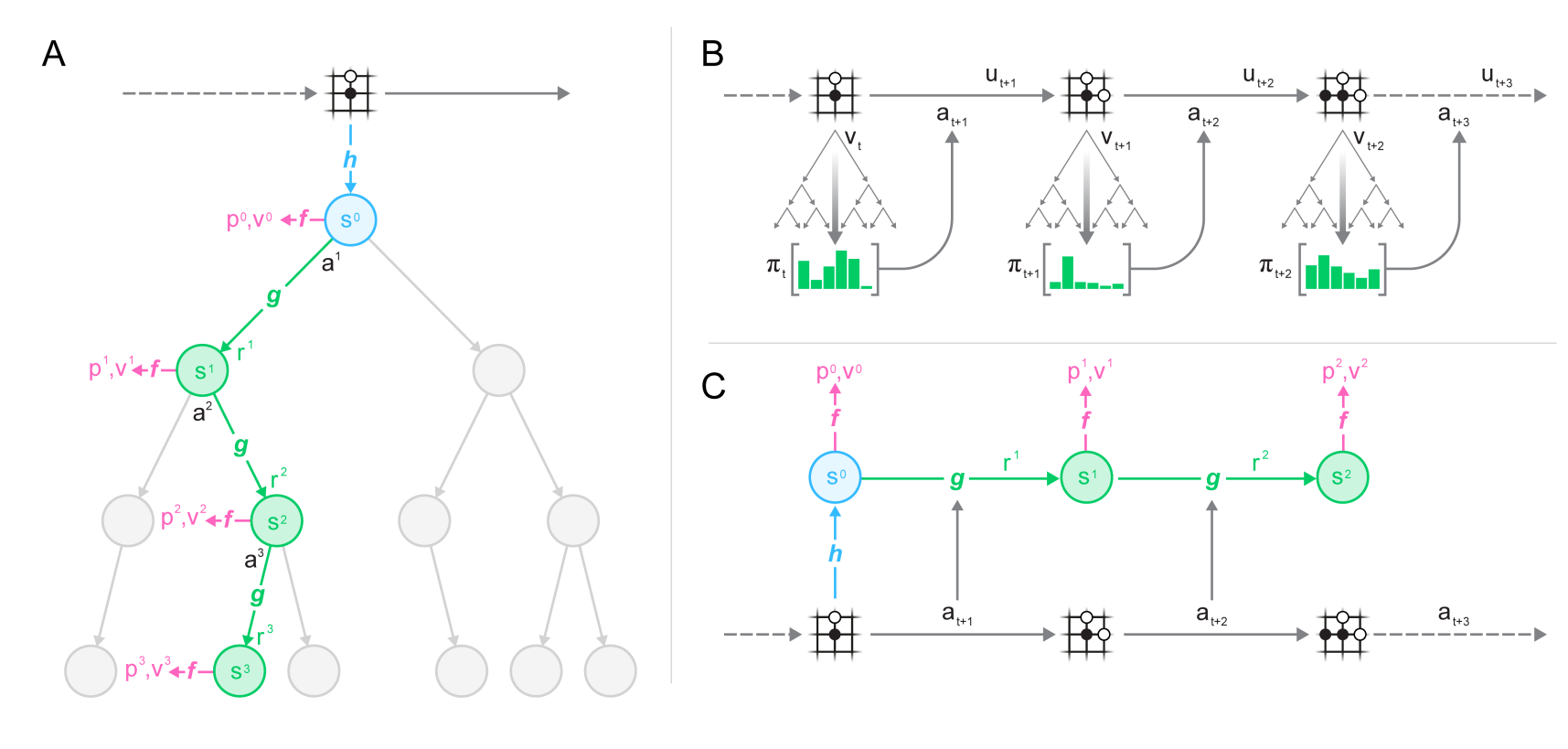

Estimating the value function is a critical part of RL, as it quantifies how choosing an action in a state affects future return. The reverse of this is the credit assignment question: “given an outcome, how relevant were past decisions?” The authors define the “hindsight distribution” of an action as the conditional probability that the first action of a trajectory was that action, given some outcome of the trajectory (either state-conditional or return-conditional). This learned hindsight distribution can be used to better estimate value functions or policy gradients. The authors validate new algorithms that use Hindsight Credit Assignment in a few diagnostic tasks.
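As a rough illustration of the definition, the return-conditional hindsight distribution can be estimated by counting in a small tabular setting. The sketch below assumes all trajectories start from the same state and are summarized as (first action, outcome) pairs. The function name `estimate_hindsight` and the sample data are made up, and the counting estimator stands in for the learned distribution described in the paper.

```python
from collections import Counter, defaultdict

def estimate_hindsight(samples):
    """Estimate P(first action = a | outcome) by counting.

    `samples` is an iterable of (first_action, outcome) pairs, all
    collected from the same start state (an assumption of this sketch).
    Returns a dict: outcome -> {action: empirical probability}.
    """
    counts = defaultdict(Counter)
    for action, outcome in samples:
        counts[outcome][action] += 1
    return {
        outcome: {a: n / sum(c.values()) for a, n in c.items()}
        for outcome, c in counts.items()
    }

# Made-up data: outcome 1 mostly follows "right" as the first action.
samples = [
    ("left", 0), ("left", 0), ("right", 0),
    ("right", 1), ("right", 1), ("left", 1), ("right", 1),
]
hindsight = estimate_hindsight(samples)
```

In this toy data, `hindsight[1]["right"]` is larger than `hindsight[1]["left"]`, which reads as: given that outcome 1 occurred, the first action was more likely to have been "right".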

Read more

Leveraging Procedural Generation to Benchmark Reinforcement Learning

What it says

OpenAI (Cobbe et al.) released a set of 15 new environments similar to the CoinRun environment released last year, where the environments are “procedurally generated.” Having content procedurally generated in many aspects (level layout, game assets, entity spawn location and timing, etc.) encourages the agent to learn a policy robust to such variations. Procedurally generated environments also allow for a natural division into training and test sets by generating different environments for each.
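The train/test division can be sketched with a toy seeded generator. This is not the API of the released environments: `generate_level`, the grid encoding, and the seed ranges are all hypothetical, chosen only to show that disjoint seeds give disjoint sets of generated levels to train and evaluate on.

```python
import random

def generate_level(seed, width=8, height=4, wall_prob=0.2):
    """Build a toy grid level deterministically from `seed`.

    '#' marks a wall and '.' marks free space. The same seed always
    produces the same level.
    """
    rng = random.Random(seed)  # private RNG so global state is untouched
    return tuple(
        "".join("#" if rng.random() < wall_prob else "." for _ in range(width))
        for _ in range(height)
    )

# Disjoint seed ranges define the training and test levels.
train_seeds = range(0, 200)
test_seeds = range(200, 250)

train_levels = [generate_level(s) for s in train_seeds]
test_levels = [generate_level(s) for s in test_seeds]
```

An agent trained only on `train_levels` and evaluated on `test_levels` is then scored on levels it has never seen, which is the kind of generalization measurement the paragraph above describes.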

Read more

External resources

- Quantifying Generalization in Reinforcement Learning (arXiv Preprint): The original paper for CoinRun

- Obstacle Tower: A Generalization Challenge in Vision, Control, and Planning: Obstacle Tower, a 3D procedurally generated environment by Unity

Here is some more exciting news in RL:

Reinforcement Learning: Past, Present, and Future Perspectives

The recording of a NeurIPS 2019 presentation on RL by Katja Hofmann (Microsoft Research) is available online.

Stable Baselines - Reinforcement Learning Tips and Tricks

Stable Baselines, a major well-maintained fork of OpenAI Baselines, released a set of tips and tricks for RL.

Winner Announced for NeurIPS 2019: Learn to Move - Walk Around

The winners for each track of NeurIPS 2019: Learn to Move - Walk Around were announced.

New State-of-the-art for Hanabi

Facebook AI wrote a blog post on how they built a new bot that achieves state-of-the-art performance in Hanabi, a collaborative card game.

Never miss an issue of RL Weekly from us, subscribe to our newsletter
